Covariant CEO and cofounder Peter Chen sat down with Marina Lenz of our customer Otto Group to discuss how the Covariant Brain, the first foundation model for robotics, is increasing warehouse efficiency, building resilience to labor market challenges, and improving the quality of jobs within warehouse facilities. Otto Group, the largest online retailer of European origin, is investing in performance and innovation, beginning with the deployment of 120 Covariant Brain-powered robotic solutions across its logistics network. Peter and Marina discuss overcoming the challenges of building robots that can “see,” “think,” and “act,” why warehouses are a highly complex environment for robots, and how Covariant’s universal AI platform has been influenced by three of our cofounders' years at OpenAI in its early days.

Marina Lenz, Otto Group: In 2017, Peter Chen cofounded Covariant together with three of his peers to apply their cutting-edge AI research to real-world problems. Previously, Peter was a PhD student at UC Berkeley, specializing in AI and robotics. He then worked at OpenAI, as did two of his Covariant cofounders. At the time, OpenAI was a very young organization without its own office, but the influence of that experience is still visible in Covariant’s work today. Hi Peter, thanks so much for joining the podcast and taking the time for this interview.

Peter, Covariant: It’s great to be here.

Marina: You’re one of the world's leading experts in AI, and of course the technology is very widely discussed, but it seems to me that many terms get mixed together: AI, chatbots, machine learning, deep learning, and so on. I even found articles debating whether ChatGPT should be considered AI. So from your expert point of view, what makes a technological solution intelligent?

Peter: First, I would say the confusion is normal. The technology is developing at such a rapid pace that even the people doing research in the field, the people behind this technological revolution, find it hard to keep up. When technology changes this quickly, confusion is normal, and this is very much an expected question. As for what is really at the core of intelligence, and what makes a system something we would call AI, I typically think of it as performing some type of cognitive task. What does that mean? It is distinct from mechanical calculation. What does one plus one equal? That is a mechanical calculation. What is a more cognitive task? What is the object in an image? What kind of object is it? What can you do with it?

All of these things require a certain degree of understanding, reasoning, or decision-making, and that type of cognitive task is what we would expect an intelligent system to perform. Obviously, there is a huge breadth of cognitive tasks that AI can perform, and within each task there are varying degrees of depth, and of how well it can be done. But that becomes a much broader topic, as intelligence is a very broad subject.

Marina: Robots have been part of the working world for quite some time now, for instance in the automotive and logistics industries. Yet they seem limited to performing very specific types of tasks; picking items, in particular, is often still done by humans. What makes this so difficult for robots?

Peter: We have a lot of robots in our lives today. We have a lot of automation equipment in automotive, in different kinds of manufacturing, and in logistics. But all of those robots and automation equipment are largely performing repetitive tasks, meaning the motion they perform is the same. They repeat the same thing again and again. Through clever mechanical and electrical engineering, we have built machines that are really good at that: repeating the same thing again and again with very high accuracy and very high repeatability. What we have not yet been able to solve is making those robots flexible, giving them the ability to perform different kinds of motion and to do things differently when they are presented with a different environment. That lack of flexibility is why many of these picking tasks remain unsolved by robotics, because for most picking tasks you need to do things differently every time, even when you are handling just one fixed kind of object. Where does the object appear, and in what orientation does it appear in front of the robot? All of this changes how a robot needs to interact with and pick up that item.

Marina: Are there other challenges in the context of logistics and intelligent robots?

Peter: I would say there are two layers to that question. The first layer is: are there challenges and opportunities that AI can solve in the context of logistics and warehouses? The answer is that there are a lot of them. Anything involving data analytics, forecasting, or planning is an area where data can inform AI to make better decisions. So outside the domain of robotics, there is definitely a lot of room for AI to play a role in logistics and warehouses. Specifically for robotics in warehouses, the future is very broad. We have talked about picking in particular, but picking is only a small part of what robots can do in a warehouse. After you pick up an item, you can inspect whether it is damaged. After you inspect it, you can scan its barcode. Then you can do sortation, and you can do packing. And that is just fulfillment, what you might call forward logistics. We still have not talked about reverse logistics, like how you deal with returns and process those items. So there are many manipulation tasks beyond picking; picking is just one subset of them. There is a large variety of tasks that AI-powered robots can perform in a warehouse. That is the potential we see: AI-powered robots going into warehouses today and starting to perform some of the simpler picking tasks. But that is really just the beginning of what an AI-powered, robot-based warehouse of the future looks like, and we would expect to see lots of robots performing more of the repetitive tasks that currently require an associate.

Marina: Covariant developed the Covariant Brain, which enables robots to “see,” “think,” and “act.” How does that work? What does that mean?

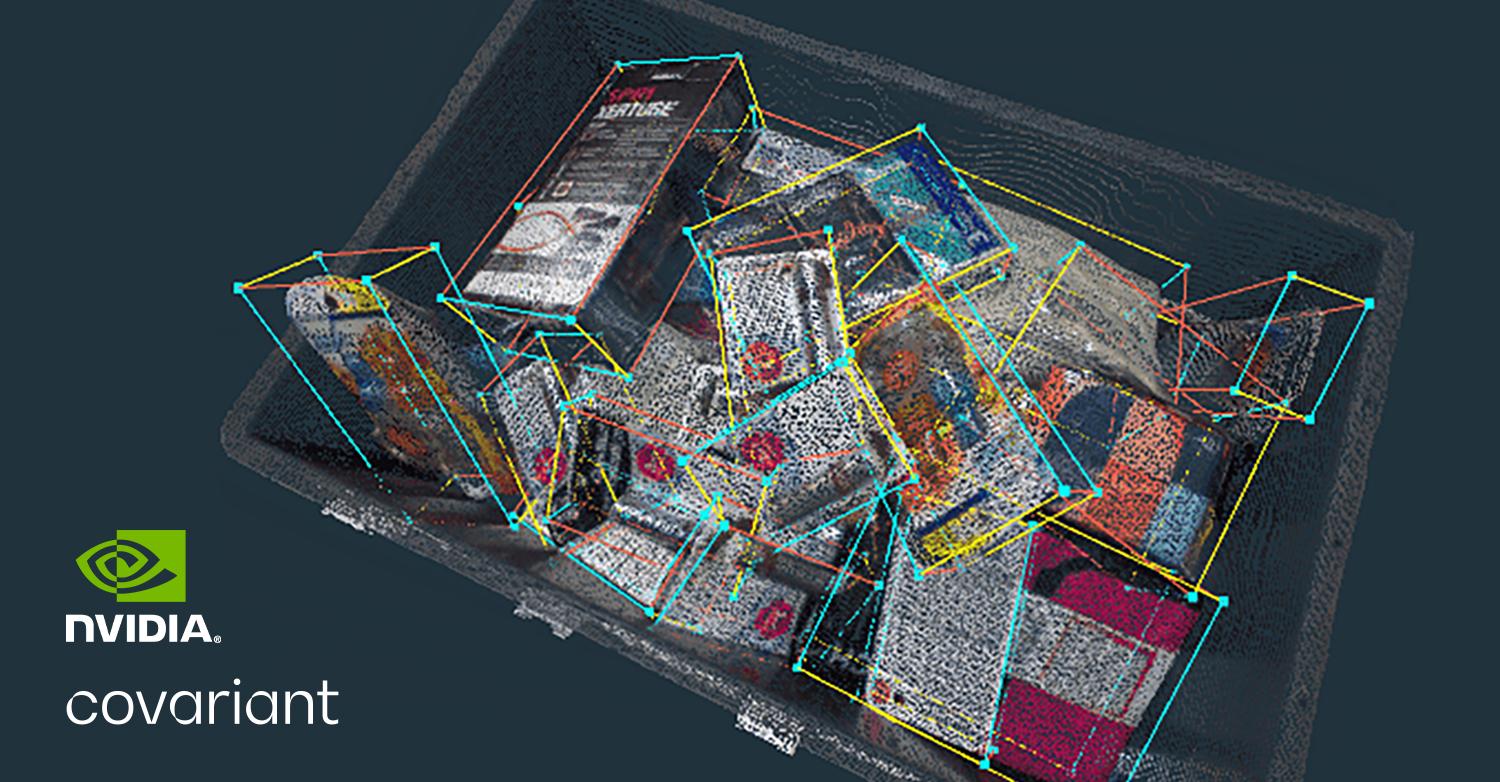

Peter: This comes back to the initial question we were discussing, which is what counts as intelligence. Intelligence is not just about performing certain mechanical tasks or repeating something; it is the ability to handle something new, and that requires a bit of thinking. The Covariant Brain is a foundation model for robotics. What does that mean? It is an AI model that is trained on vast amounts of robot data. It aggregates robot interactions with the world, from robots across multiple continents and in different kinds of warehouses, handling different kinds of items. All of this data creates one general AI system that gives us the ability to look at the world and understand it very much like a human would. It understands the world in 3D, so it knows where to go to grab a certain item. It understands object semantics: which object should I pick up, and which should I not? It understands physical affordances, meaning how an object can be interacted with: what is a stable way to grasp something, and what is not? And it can reason about movement in our physical world: how do you move an item so that you do not drop it, so that you transport it successfully without damaging it or colliding with anything? All of these somewhat subconscious abilities that we as human beings use to interact with the world are what we are recreating inside the Covariant Brain. The AI has the ability, just like a human, to understand the world in 3D, understand objects, understand affordances, and understand motion, and it then endows actual physical systems with those capabilities, giving them the ability to move and react intelligently to what is in front of them.

Marina: That’s very interesting, and probably also a good bridge to my next question, because you and two of your cofounders worked at OpenAI before starting your own company. It would be very interesting to hear how that experience affects the way you now approach intelligence and intelligent robots in the logistics industry and its challenges.

Peter: This is a really good question. OpenAI definitely had a profound influence on Covariant. A little bit of background: two of my cofounders, Professor Pieter Abbeel, a UC Berkeley professor of robotics, and our CTO, Rocky Duan, and I were very early members of OpenAI. When we joined, we didn’t even have an office. We were working out of the condo of Greg Brockman, OpenAI's CTO, so that’s how early it was. But even very early on, OpenAI had a core technology thesis: in order to build a general AI system, you need to build a data-defined system. When we talked about artificial intelligence in the past, there were many kinds of artificial intelligence. For example, back in the 90s there was a computer that could beat a human champion at chess. That is a form of intelligence, and it is pretty smart. But if you look behind it, the intelligence was built by human ingenuity: humans taught the computer program how to play chess, gave it the rules of chess, gave it heuristics about what it should and shouldn’t do, and had it play out a few moves ahead. So it is definitely impressive intelligence, but not data-defined intelligence, because you are programming the intelligence into a computer system. I would call that the first generation, classical AI. Those are intelligent systems, but they are not data-defined. How smart they are depends entirely on how smart the programmer is.

When you look at the more recent phenomenon of ChatGPT, as well as the Covariant Brain, it represents a new generation of AI that embodies the philosophy of truly data-driven systems. What we mean by that is that most of the intelligent behavior the system exhibits comes from the data, as opposed to coming from the engineers and researchers who program the system. This is a drastic paradigm shift. In the past, classical AI was only as good as its programming and would never exceed that, no matter how long it interacted with the world. Now, an AI can actually exhibit behavior that even the people who designed it don’t anticipate, because it is learning from the data by itself. It goes beyond the limitations of whoever designs and programs the system, and it can keep learning: a purely data-driven system continues to improve as it gets more data.

This idea of a radically data-defined, data-driven AI is the philosophy OpenAI had from the beginning, and it has also been our philosophy since the beginning of Covariant. Today Covariant has robots across three continents, and all of these robots are connected: their learnings are shared and contribute to a single Covariant Brain. No one else on the planet does that. There are other robotic systems out there, but they all learn in isolation. One robot might have its own brain and its own data, but the robots are not connected and do not share what they learn. So this philosophy is heavily influenced by our time at OpenAI, and it now has a name. A couple of years ago, when we were still at OpenAI, it didn’t have one. It is now called a foundation model, a term coined by a group of university researchers.

Now that we have this term, we can say that much of OpenAI’s core philosophy is about building foundation models, and the same is true for Covariant, where much of our core philosophy is about building a foundation model for robotics. I would say that is the primary influence. There are also many smaller, more anecdotal influences. For example, we use a lot of the same interview questions when we interview AI scientists, simply because that is where a lot of the team came from, and we inherited a lot of the ideas.

Marina: That is really cool. Recently, some very well-known Silicon Valley founders and personalities have called for a halt to the development of AI that is smarter than GPT-4, due to the risks associated with the technology. What is your take on this, and do you consider Covariant’s AI to be smarter than GPT-4?

Peter: Let me take the second part of the question first. Do I consider Covariant’s AI, the Covariant Brain, to be smarter than GPT-4? The answer is yes and no. Yes, in the sense that the Covariant Brain is trained on far more data from robots interacting with the world, especially in logistics settings, than GPT-4. GPT-4 is largely trained on data from the internet, and you can’t find that much data on the internet about the physical world, especially about warehouses, let alone robot interactions within warehouses. Because of that, GPT-4 is actually not that smart in a logistics warehouse, especially in a robotics setting. As we discussed, GPT-4 and the Covariant Brain are both foundation models, and they are only as good as their data. On the other hand, GPT-4 has seen a great deal more data about many other things, so if you want to translate a paragraph from German to English, for example, GPT-4 is much better.

Going back to the first part of your question, on the recent call to stop developing models more capable than GPT-4: when you look at the letter, the core idea behind that call is not so much how the technology can be misused as existential risk. The argument is about the so-called singularity: if humans can build an intelligent system that is smarter than a human, then that system can improve itself faster than humans can. This leads to the so-called hard-takeoff scenario, in which intelligence improves so fast that it quickly gets out of control. That is the core existential risk people are pointing to when it comes to training models bigger than GPT-4. From a scientist’s perspective, the risk exists theoretically, but existential risks exist for many other things in our world as well, and if you put an actual likelihood on this one, I don’t think it is that likely. I would instead ask people to focus on how the technology can be misused. Just like any powerful technology, there are ways to misuse it, and with large language models and generative AI, misinformation is definitely a big part of that. So I would urge people to think more about how these technologies can already be misused without training larger models, and how to mitigate that while still harnessing their power, rather than focusing their time on the existential risk of humanity being taken over by AI.

Marina: Let’s look into the broader future. Imagine Covariant has mastered the logistics industry and created perfectly trained, smart robots that can pick and place just fine. Could these robots survive in an environment other than a warehouse? Would they truly be intelligent? And if so, what is next for Covariant and the Covariant Brain?

Peter: This is a great question. Some of the assumptions behind the question might suggest that logistics and warehouses are a rather simple environment, but that is actually not true.

From that perspective, AI that is trained in warehouses and has shown success there should have the ability to interact with the broader world. I am choosing the word "should" very carefully here, because there is an AI challenge and there is also a robotics challenge. Even when you have an AI that has learned how to handle every single object in the world, that does not mean you have a physical body suited to every other kind of work. Let’s say I want to build a household robot, and I give it an AI that understands how to interact with anything in your home because it has seen it all in a warehouse. I have a lot of confidence in the Covariant Brain’s ability to solve that AI problem. But whether we can build a physical, mechanical robot that is cost-effective in a home setting is an entirely different question. The same philosophy guides how the Covariant Brain, and Covariant, expand into additional domains: it comes down to evaluating whether the physical body makes sense for a given industry. And there are many industries where it would. For example, a large chunk of manufacturing is still manual; agriculture, especially the picking and handling of goods, is largely manual; and the service industry is still largely manual, with restaurants and commercial kitchens being extremely manual. All of these places have sufficient industrial scale to make building purpose-specific robotics hardware worthwhile. Those are places we would expand into, and as hardware costs come down, we would definitely expect Covariant Brain-powered robots to go into consumer settings as well.