Andrew Sohn, Anusha Nagabandi, Carlos Florensa, Daniel Adelberg, Di Wu, Hassan Farooq, Ignasi Clavera, Jeremy Welborn, Juyue Chen, Nikhil Mishra, Peter Chen, Peter Qian, Pieter Abbeel, Rocky Duan, Varun Vijay, Yang Liu

Watch Covariant's demos to view an overview of RFM-1's language and physics capabilities.

The last century has brought us transformative technological advancements, from computers and the internet, to advanced computing, and now artificial intelligence. These advancements have influenced every field of study, every industry, and every facet of human life.

At Covariant, we believe that the next major technological breakthrough lies in extending these advancements into the physical realm. Robotics stands at the forefront of this shift, poised to unlock efficiencies in the physical world that mirror those we've unlocked digitally.

Recent advances in foundation models have led to models that can produce realistic, aesthetic, and creative content across various domains, such as text, images, videos, music, and even code. The general problem-solving capabilities of these remarkable foundation models come from the fact that they are pre-trained on millions of tasks, represented by trillions of words from the internet. However, partly due to the limitation of their training data, existing models still struggle with grasping the true physical laws of reality, and achieving the accuracy, precision, and reliability required for robots’ effective and autonomous real-world interaction.

RFM-1 — a Robotics Foundation Model trained on both general internet data as well as data that is rich in physical real-world interactions — represents a remarkable leap forward toward building generalized AI models that can accurately simulate and operate in the demanding conditions of the physical world.

Foundation model powered by real-world multimodal robotics data

Behind the more recently popularized concept of “foundation models” are multiple long-standing academic fields like self-supervised learning, generative modeling, and model-based reinforcement learning, which are broadly predicated on the idea that intelligence and generalization emerge from understanding the world through a large amount of data.

Following the embodiment hypothesis that intelligent behavior arises from an entity's physical interactions with its environment, Covariant’s first step toward this goal of developing state-of-the-art embodied AI began back in 2017. Since then, we have deployed a fleet of high-performing robotic systems to real customer sites across the world, delivering significant value to customers while simultaneously creating a vast and multimodal real-world dataset.

Why do we need to collect our own data to train robotics foundation models? The first reason is performance. Most existing robotic datasets contain slow-moving robots in lab-like environments, interacting with objects in mostly quasi-static conditions. In contrast, our systems were already tasked with working in demanding real-world environments with high rates of precision as well as performance. In order to build robotics foundation models that can power robots to achieve high rates in the real world, the training data has to contain robotic interactions in those demanding environments.

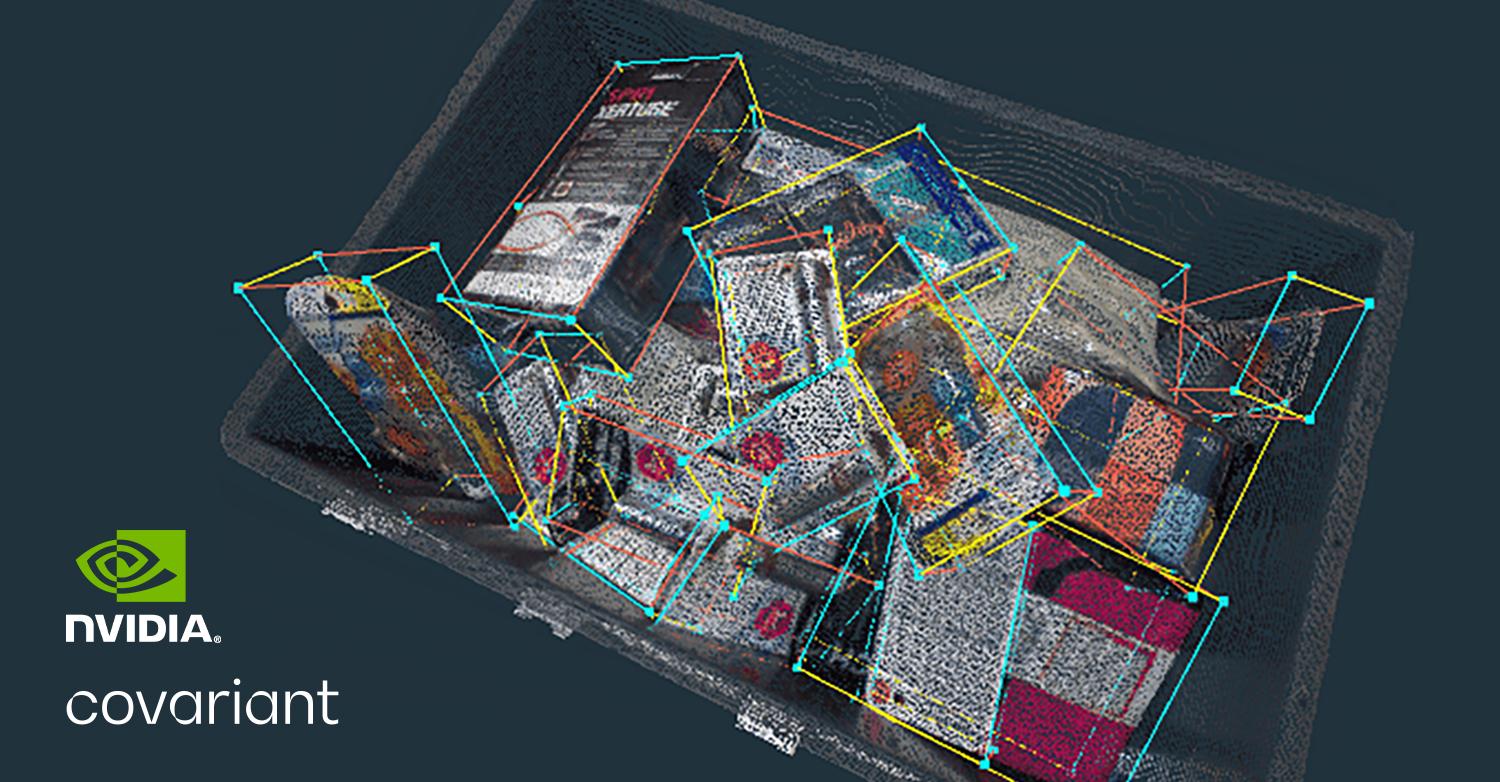

Our systems have been manipulating deformable objects, handling high occlusions, reasoning about the varying suction dynamics across materials, dealing with the chaos of irregularly shaped items in motion, and handling a wide array of objects varying from makeup and clothes to groceries and mechanical parts.

The resulting multimodal dataset mirrors the complexity of deploying systems into the real world, and is enriched with data in the form of images, videos from various angles, station and task descriptions, sensor data from motor encoders and pressure sensors, and various forms of quantitative metrics and results.

The second reason that we need to build our own robotics dataset is that a truly robust understanding of the physical world comes from encountering many rare events and making sense of them. Through operating in the ever-changing warehouse environments 24/7, our systems uncover these long-tail events that are hard to encounter in lab-like environments.

Starting with a wide variety of operations in the warehouse automation space, our new generation of AI (RFM-1) showcases the power of Robotics Foundation Models. Our approach of combining the largest real-world robot production dataset, along with a massive collection of internet data, is unlocking new levels of accuracy and productivity in warehouse applications, and shows a clear path to expanding to other robotic form factors as well as broader industry applications.

What is RFM-1

Set up as a multimodal any-to-any sequence model, RFM-1 is an 8 billion parameter transformer trained on text, images, videos, robot actions, and a range of numerical sensor readings.

By tokenizing all modalities into a common space and performing autoregressive next-token prediction, RFM-1 uses its broad range of input and output modalities to enable diverse applications.

For example, it can perform image-to-image learning for scene analysis tasks like segmentation and identification. It can combine text instructions with image observations to generate desired grasp actions or motion sequences. It can pair a scene image with a targeted grasp image to predict outcomes as videos or simulate the numerical sensor readings that would occur along the way.

We will now dive deeper into RFM-1’s capabilities, specifically in the areas of physics and language understanding.

Understanding physics through learning world models

Learned world models are the future of physics simulation. They offer countless benefits over more traditional simulation methods, including the ability to reason about interactions where the relevant information is not known apriori, operate under real-time computation requirements, and improve accuracy over time. Especially in the current era of high-performing foundation models, the predictive capability of such world models can allow robots to develop physics intuitions that are critical for operating in our world.

We have developed RFM-1 with exactly this goal in mind: To deal with the complex dynamics and physical constraints of real-world robotics, where the optimization landscape is sharp, the line between success and failure is thin, and accuracy requirements are tight, with even a fraction of centimeter error potentially halting operations. Here, the focus shifts from merely recognizing an object like an onion, to managing its precise and efficient manipulation, all while minimizing risks and coordinating with other systems to maximize efficiency.

RFM-1’s understanding of physics emerges from learning to generate videos: with input tokens of an initial image and robot actions, it acts as a physics world model to predict future video tokens.

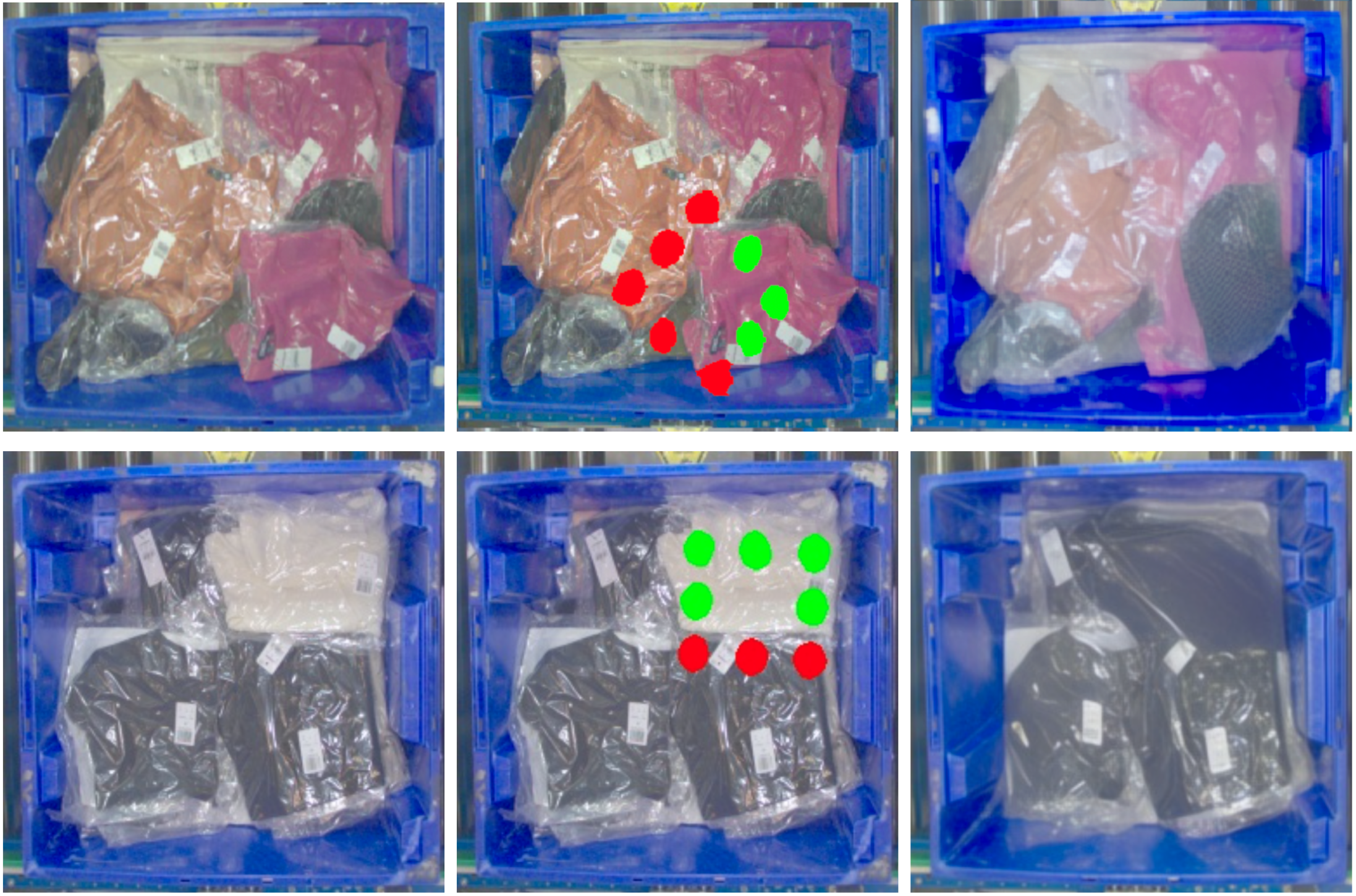

The action-conditional video prediction task allows RFM-1 to learn a low-level world model, simulating how the world will change every fraction of a second. Sometimes it’s more efficient to just predict the high-level outcome of a robot action. RFM-1 naturally provides this kind of high-level world model as well, thanks to our structured multimodal datasets and RFM-1’s flexible any-to-any formulation. In the following example, RFM-1 directly predicts how the bin in front of the robot will change a couple seconds into the future as a result of a prescribed robot’s grasp action, acting as a high-level world model.

These examples show that the model understands the prescribed robot actions and can reason about whether those actions will be successful and how the content of the bin will change purely from next-token prediction.

These high-fidelity world models can be used for online decision-making through planning, as well as for offline training of other models and policies. Prior works like AlphaGo indicate that planning in a world model will likely be crucial to achieving super human-level performance. Furthermore, the physics understanding that emerges from these world modeling tasks directly strengthens other capabilities of RFM-1, like the ability to map images to robot actions.

Leveraging language to help robots and people collaborate

For the past few decades, programming new robot behavior has been a laborious task for experienced robotics engineers only. RFM-1’s ability to process text tokens as input and predict text tokens as output opens up the door to intuitive natural language interfaces, enabling anyone to quickly program new robot behavior in minutes rather than weeks or months.

Language-guided robot programming

RFM-1 allows robot operators and engineers to instruct robots to perform specific picking actions using plain English. By allowing people to instruct robots without needing to re-program, RFM-1 lowers the barriers of customizing AI behavior to address each customer's dynamic business needs and the long tail of corner case scenarios.

RFM-1 doesn’t just make robots more taskable by understanding natural language commands, it can also enable robots to ask for help from people. For example, if a robot is having trouble picking a particular item, it can communicate that to the robot operator or engineer. Furthermore, it can suggest why it has trouble picking the item. The operator can then provide new motion strategies to the robot, such as perturbing the object by moving it or knocking it down, to find better grasp points. Moving forward, the robot can apply this new strategy to future actions.

Limitations

RFM-1 is just the beginning of our journey of building foundation models for general-purpose robotics, and there are currently several limitations that we will address in our ongoing research initiatives to scale up and to innovate on algorithmic fronts.

First of all, despite promising offline results of testing on real production data, RFM-1 has not yet been deployed to customers. We already have firsthand experience of how RFM-1 can bring value to existing customers and expect to roll out RFM-1 to them in the coming months. By deploying RFM-1 to production, we also expect the collected data to help target RFM-1’s current failure modes and accelerate RFM-1’s learning.

Limited by the model’s context length, RFM-1 as a world model currently operates at a relatively low resolution (~512x512 pixels) and frame rate (~5 fps). Although the model can already start to capture large object deformations, it cannot model small objects / rapid motions very well. We are excited about continuing to scale up the model’s capacity. Additionally, we observe a strong correlation between world model prediction quality and the amount of data available. We expect to scale up our data collection speed by at least a factor of 10 through the robots coming into production soon.

Finally, although RFM-1 can start to understand basic language commands to locally adjust its behavior, the overall orchestration logic is still largely written in traditional programming languages like Python and C++. As we expand the granularity of robot control and diversity of tasks via scaling up data, we are excited about a future where people can use language to compose entire robot programs, further reducing the barrier of deploying new robot stations.

RFM-1: Launching pad for billions of robots

RFM-1 is the start of a new era for Covariant and Robotics Foundation Models.

By giving robots the human-like ability to reason on the fly, RFM-1 is a huge step forward toward delivering the autonomy needed to address the growing shortage of workers willing to engage in highly repetitive and dangerous tasks — ultimately lifting productivity and economic growth for decades to come.

Interested to learn how RFM-1 can increase the reliability and flexibility of your fulfillment network? Contact us.