Each year, NVIDIA hosts GTC (GPU Technology Conference), AI conference, uniting the brightest minds in the field to unveil breakthrough discoveries. The GTC 2024 spotlight shone brightly on robotics, with notable unveilings such as Covariant's RFM-1 (Robotics Foundation Model) and NVIDIA Robotics’ Project GR00T (Generalist Robot 00 Technology), aimed at establishing a foundational model for humanoid robots.

We are highlighting key moments from Peter Chen’s panel at GTC, featuring leading robotics experts at the forefront of developing the latest generative AI-based technologies in robotics. Dive into the panel insights into the development and deployment of commercial robotics.

What does it mean to build an AI that can solve 90% of all robot tasks in the world?

- Defining this was difficult from a purely research perspective

- Controlled lab environments and academia focus on theoretical solutions, not real-world products and customer needs

- Transitioning to dynamic operational settings exposes the actual tasks and use cases a generalized robotic AI must handle

The path to achieving truly general AI lies in deploying robotics within the complexities of real-world environments. It is only by tackling diverse customer challenges in production environments, that we can amass the critical data and experiences necessary to push the boundaries of generalized intelligence.

What data is necessary for general robotic intelligence?

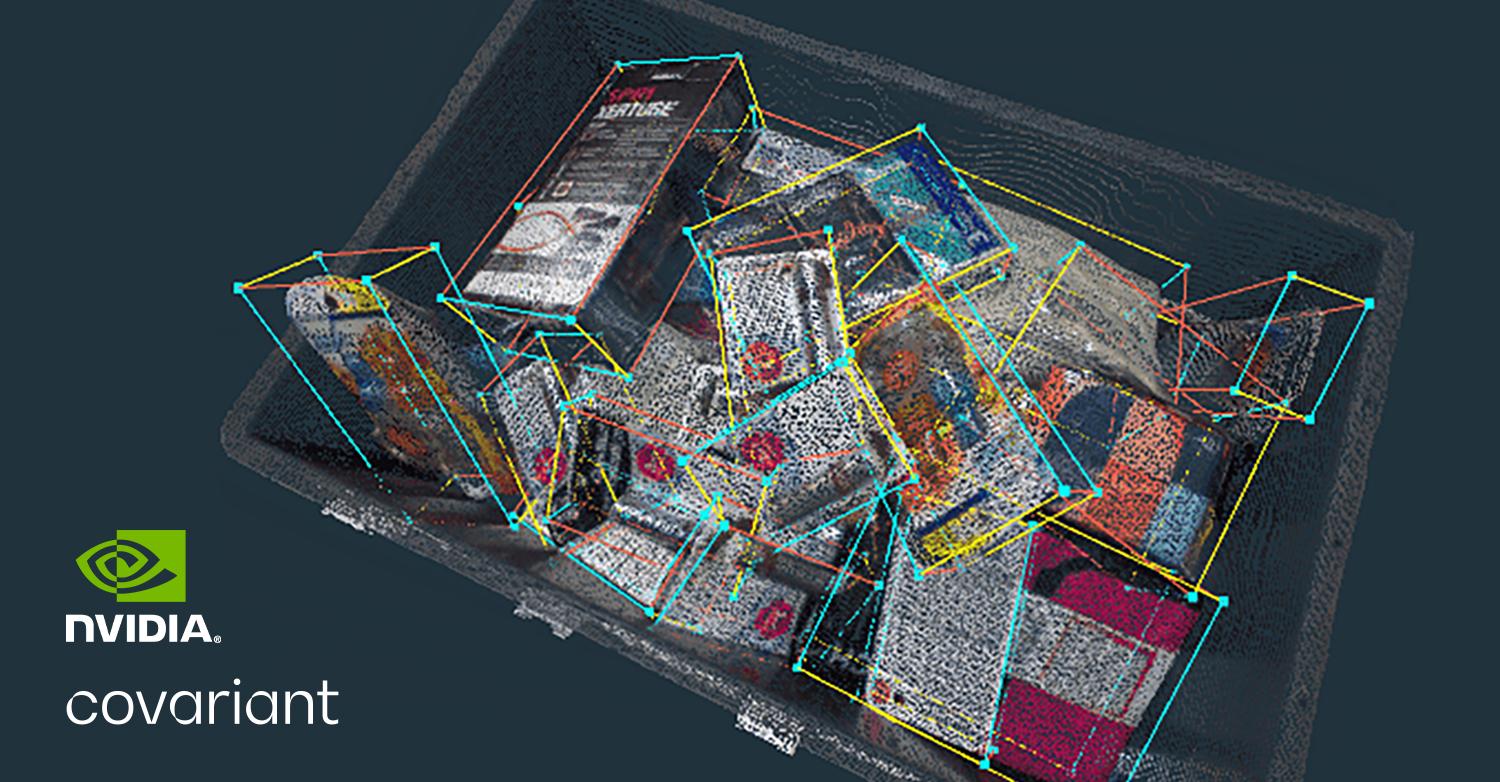

A Robotics Foundation Model cannot be trained on internet or lab data alone, real-world data is the key to achieving the speed, reliability, and accuracy needed to deliver robotic systems that can understand how to manipulate an unlimited array of items and scenarios autonomously.

This demands training data that spans multiple modalities – text, visuals, videos, robot actions, and a wide range of physical measurements or sensor readings – much like the rich sensory experiences and multimodal nature of human learning.

The visual data must comprise actual production footage, images, and measurements that capture the intricacies and dynamics of real-world environments. The visual foundation is reinforced by recordings of robotic actions and their consequent impacts within these environments, alongside text data unlocking human communication and instruction capabilities. Ultimately, this immense amount of multimodal real-world data grounds a foundation model’s understanding of the world in physical reality.

What does it mean to train robots with generative AI?

- GPT (Generative Pre-trained Transformer) is a versatile general intelligence model pre-trained on extensive amounts of data to perform various tasks.

- In the case of large language models in the digital world such as GPT-4, Gemini, and Claude, 'generative' refers to training the model to predict the next token based on text from the web.

- For robotics, this means training models on vast datasets of physical interactions to build generalized AI models that can accurately simulate the physical world.

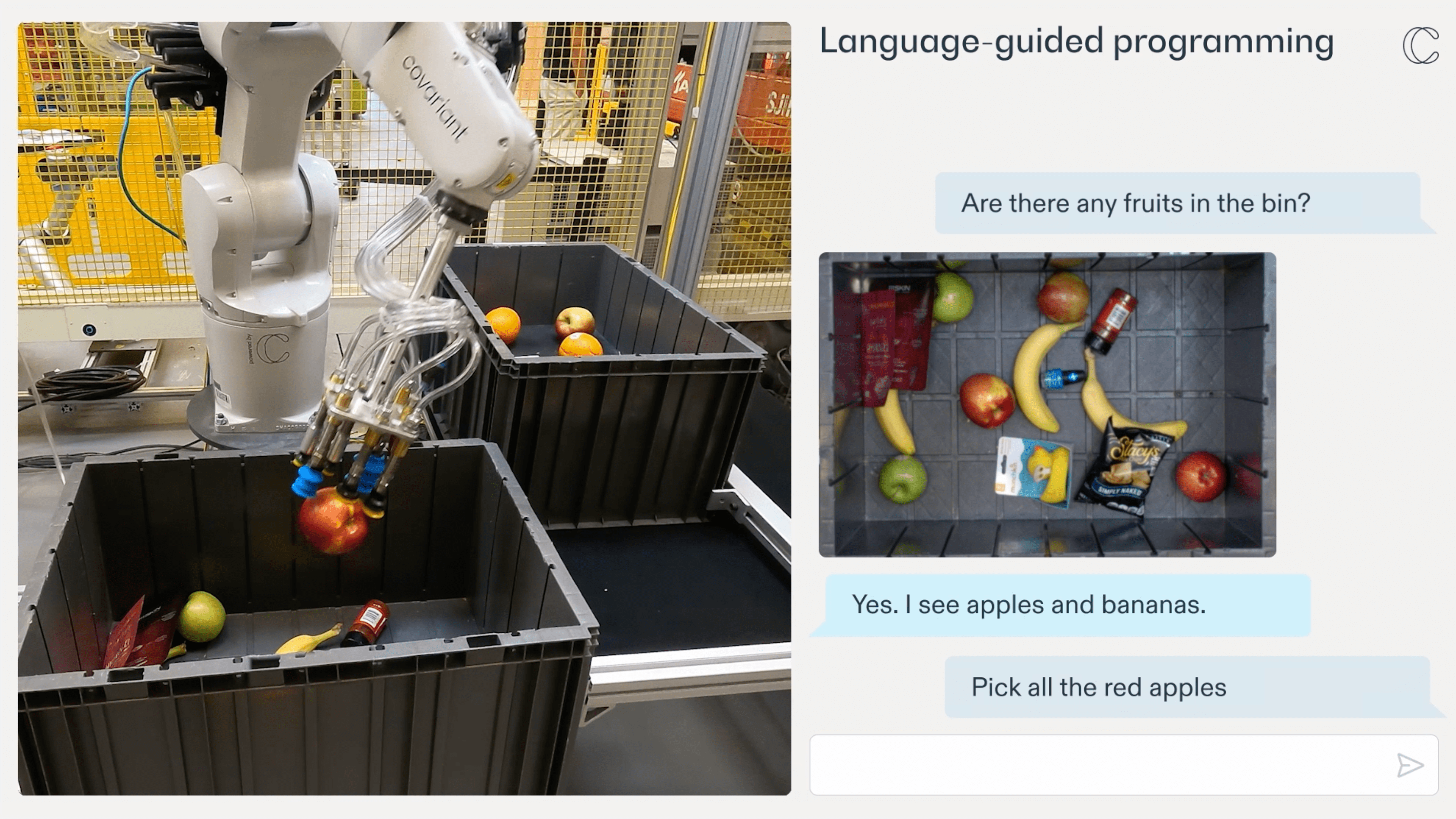

- Our large-scale real-world data and unique team have enabled us to build RFM-1, the first commercial Robotics Foundation Model. RFM-1 is set up as a Multimodal Any-to-Any Sequence Model trained on text, images, video, robot actions, and physical measurements to autoregressively perform next-token prediction.

- Tokenizing all modalities into a common space, RFM-1 can understand any modalities as input and predict any modalities as output.

- This foundation model approach allows us to solve many new tasks across various robotic form factors and industries, – from warehouse operations like picking and packing to more general-purpose robotic use cases like folding laundry.