After being in stealth mode for 2+ years, we’re excited to finally launch Covariant to the world today and share the story of why we founded the company, our journey so far, and our vision for the future of AI!

Founding

When we founded Covariant in 2017, recent advances in AI were starting to allow robots to learn remarkable skills from their own experiences. We had fun teaching robots to learn to stand up, run, pick up apples, and even fold towels. There was one catch: those robots were always in simulated or lab environments. Would it be possible to take this progress beyond the lab and achieve similar breakthroughs in the real world?

To answer this question, it’s worth first considering what had been driving the rapid progress in AI: a combination of (i) experiences for the AI to learn from and (ii) research breakthroughs in AI architectures that could absorb ever larger amounts of experience. When done successfully, the result is AI that’s exceptionally good at solving the problem to which it’s been exposed.

Reflecting on the point of experiences, it became clear to us that for robots to achieve their full real-world potential, they must also learn in the real world: they must interact with the limitless range of objects with which we humans also interact and they must perform the limitless number of tasks that we perform.

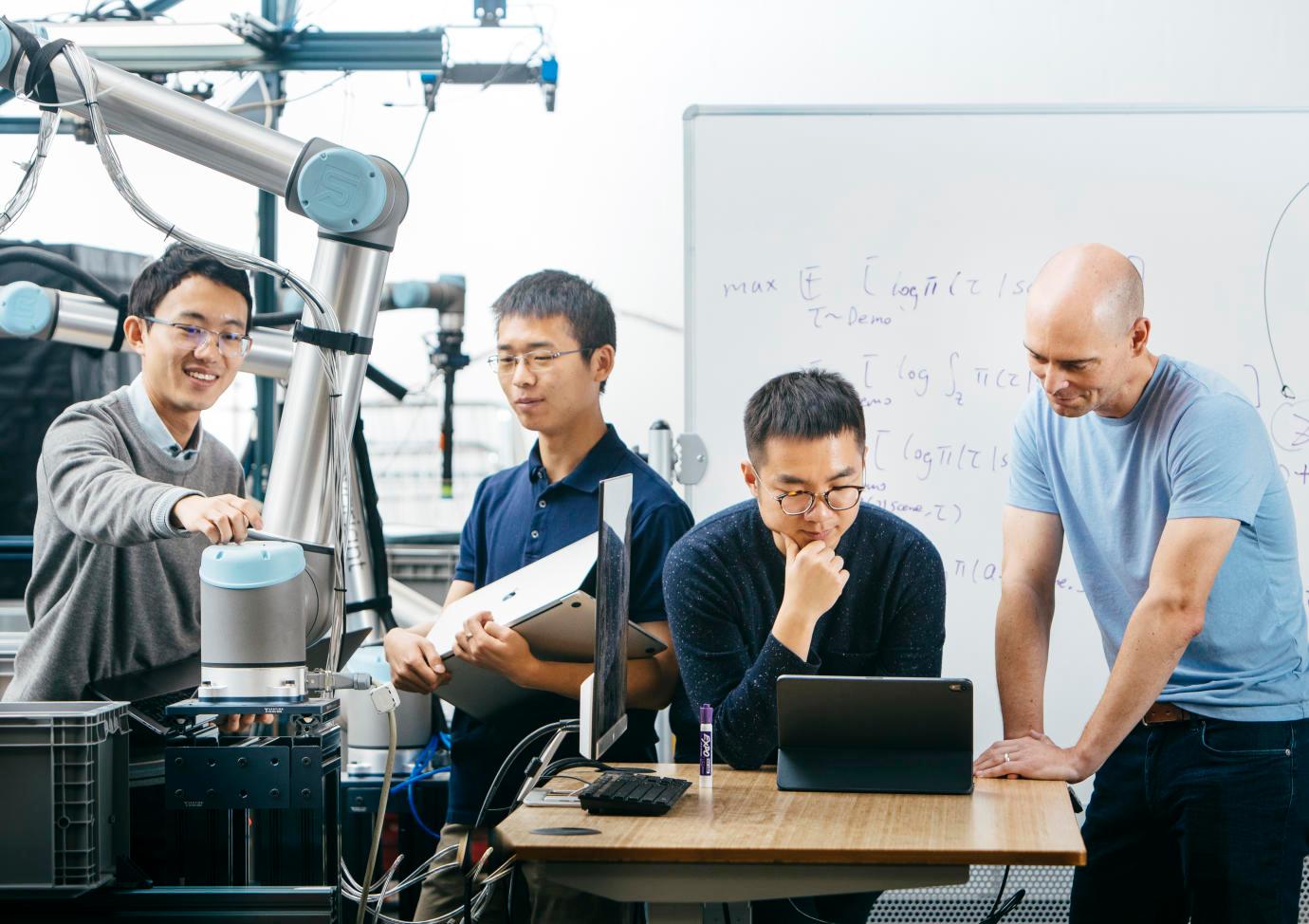

Reflecting on the point of architectures, it became clear that we needed to build a substantial AI research team. Real-world data is much more diverse than lab data. We needed to build fundamentally new architectures to learn from such data.

So, in early 2018, we embarked on our journey to start exposing robots to the real world and researching new AI architectures that could internalize those experiences (much richer than typical lab experiences).

The Promises & Challenges of Logistics

We met with hundreds of leaders from a wide range of industries to understand how they see the role of AI Robotics. From there, we concluded that the entry point to the real world for AI Robotics is in logistics.

Warehouses and distribution centers, where hundreds of millions of products are picked, packed, and shipped every day, are an ideal learning environment. In contrast to self-driving technology or manufacturing, the cost of a “learning mistake” is more tightly bounded, so AI can be introduced early, even while it’s still learning.

At the same time, warehouses provide a very rich environment for robots to interact with. It’s very common to have tens of thousands, hundreds of thousands, or even millions of different types of items in storage (called Stock Keeping Units, or SKUs), and these SKUs are always changing.

Research on the Covariant Brain

The millions of SKUs in a warehouse provide a wide diversity of learning opportunities but also require a new kind of AI architecture, one that can internalize experiences as it interacts with limitless combinations of items. For an architecture to absorb that much experience, it can’t be limited by simplistic assumptions.

What is an example of such a simplistic assumption? Typical 3D cameras, which give a robot spatial understanding of the world, assume that objects are not transparent, meaning you can’t see through them. This is fine for a lot of objects, such as boxes or parcels. But something as common as a water bottle would break that assumption and appear invisible to any existing 3D camera.

Lifting the curtain a bit on our technology, at Covariant we decided to forsake off-the-shelf 3D cameras altogether, because no matter how many water bottles or other transparent objects we show them, they simply don’t have a mechanism to learn to see them.

So how does our robot find the bottle? Our vision system is inspired by human vision: we understand the scene through a combination of what our eyes tell us and a lot of past experience.

That’s one example of the decisions we have been making. We’ve always opted for long-term performance over the accepted shortcuts that will ultimately limit how much the AI can learn from its experience. Building this type of flexible AI with minimal engineer-imposed assumptions is how we spent the last two years of R&D.

Future

In the past two years, rather than taking specialized shortcuts for any particular segment, we have been working hard on a single Covariant Brain that can power all deployments. This single Covariant Brain will act as a flywheel: as the Covariant Brain demonstrates its value, more experiences are collected, more learning happens, and the flywheel spins faster, yielding ever-faster improvements across all robots. Today, these robots are all in logistics, but there is nothing in our AI architecture that limits it to logistics.

In the future, we look forward to further building out the Covariant Brain so it can power even more robots in industrial-scale settings, including manufacturing, agriculture, hospitality, commercial kitchens, and eventually, people’s homes. We believe mankind will benefit immensely from those smart robots. They will relieve humans of tedious, repetitive, injury-prone, and dangerous work. They will help those of us with disabilities live more independently. They will raise the standard of living for all of us.